Problems

Images are visual representations of human experiences and of the world (Molyneaux 1997). An image captures a moment of life and offers a glimpse of human history. With the overwhelming popularity of social media and the convenience of personal digital devices, the number of digital images on web platforms has exploded. According to an article on an independent online news site (Kessler 2011), in early 2011 Facebook hosted 60 billion photos, compared with 8 billion on Photobucket, 7 billion on Picasa and 5 billion on Flickr. These digital images embody aspects of human nature on a historical scale, and they present both challenges and opportunities for humanities research on digital visual media.

Many images (or photos) on social media are associated with tags that describe their content. Unlike the controlled vocabularies of domain-specific taxonomies, tags are keywords generated freely without hierarchical structure, and collectively they are characterized as a folksonomy (Trant 2009). An image, with its visual representation of the world, can be seen as an anchor that links a set of tags. Mitchell (1994) discussed the relations between images and words, arguing that images and words interact and are constitutive of representation, and that all representations are heterogeneous. Images with tags are therefore integral representations that encode fuller and richer content. This observation seems to support the assumption that meaningful links exist among the tags attached to the same image anchor.

This research focuses on the affective aspect of human life in digital images and attempts to identify a subset of tags that may indicate strong affective orientation. Such tags make it possible to anticipate viewers' affective responses to images that carry affect indicative tags strongly associated with known affect types. An affective magnitude computation method is also proposed to retrieve images with strong affective orientation.

Methodology

Among the major photo websites, Flickr offers several features that make it better suited to the purposes of this research. First and foremost, Flickr emphasizes the use of tags for image description and community activity, and Flickr photos tend to carry more tags than photos on other websites. Second, user activity on Flickr involves more direct interaction between images and words through comments and feedback. Third, Flickr provides APIs that facilitate data acquisition.

This research adopts an affective framework based on Russell's circumplex model of affect (Posner et al. 2005), in which 28 emotion words are evenly distributed around a circle centered on the origin of a two-dimensional space of affective experience. The horizontal dimension, valence, ranges from highly negative (left) to highly positive (right), whereas the vertical dimension, arousal, ranges from sleepy (bottom) to agitated (top). The set of emotion words was reduced from 28 to 12 by selecting words that occur frequently as Flickr tags while keeping the four affective quadrants equally represented (three words per quadrant). For each of these affective tags, 1,000 Flickr photos are retrieved, ranked by Flickr's popularity index, interestingness. The retrieval process also includes a check-and-replace step ensuring that the final sample of 12,000 photos contains only unique photos from unique users. This step is intended to reduce bias and make the collected data more representative.
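The check-and-replace step can be sketched as a single pass over ranked candidate lists. This is a minimal illustration, not the authors' code: it assumes each affective tag's candidates have already been fetched and sorted by interestingness, and the tag names, photo IDs and owner IDs in the example are hypothetical placeholders (no Flickr API call is made).

```python
from typing import Dict, Iterable, List, Set, Tuple

# A candidate is (photo_id, owner_id). Each tag's candidates are ASSUMED to be
# pre-sorted by Flickr interestingness, most interesting first.
Candidate = Tuple[str, str]


def sample_unique(
    ranked_candidates: Dict[str, Iterable[Candidate]],
    per_tag: int = 1000,
) -> Dict[str, List[Candidate]]:
    """Take the top `per_tag` photos per affective tag, skipping any photo or
    owner already selected, so the next-ranked candidate replaces it."""
    seen_photos: Set[str] = set()
    seen_owners: Set[str] = set()
    sample: Dict[str, List[Candidate]] = {}
    for tag, candidates in ranked_candidates.items():
        chosen: List[Candidate] = []
        for photo_id, owner_id in candidates:
            if len(chosen) == per_tag:
                break
            # Check-and-replace: duplicates (same photo or same user) are skipped.
            if photo_id in seen_photos or owner_id in seen_owners:
                continue
            chosen.append((photo_id, owner_id))
            seen_photos.add(photo_id)
            seen_owners.add(owner_id)
        sample[tag] = chosen
    return sample


# Hypothetical example with two affective tags and per_tag=2.
demo = {
    "happy": [("p1", "u1"), ("p2", "u1"), ("p3", "u2")],
    "calm": [("p1", "u1"), ("p4", "u3"), ("p5", "u4")],
}
print(sample_unique(demo, per_tag=2))
# {'happy': [('p1', 'u1'), ('p3', 'u2')], 'calm': [('p4', 'u3'), ('p5', 'u4')]}
```

In this sketch, tags processed earlier get first claim on a shared photo or user; the source does not say how such conflicts were resolved.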

Pointwise mutual information (PMI), which originates in probability and information theory, measures the mutual dependence of two random variables and has been successfully applied to word association measurement in text mining research (Church & Hanks 1989; Recchia & Jones 2009). For any two words X and Y, PMI is the base-2 logarithm of the ratio of their joint probability p(X, Y) to the product of their individual probabilities p(X) and p(Y), i.e., PMI(X, Y) = log2 [p(X, Y) / (p(X) p(Y))]. Large positive PMI values indicate a strong tendency to co-occur, negative values indicate that the words co-occur less often than expected by chance, and zero indicates independence. In this research, two tags co-occur if they both appear in the tag set of the same photo.
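A minimal sketch of PMI over photo tag sets follows. It assumes that p(X) is estimated as the fraction of photos whose tag set contains X and p(X, Y) as the fraction containing both tags; the source does not spell out the estimator, and the toy tags are hypothetical.

```python
import math
from typing import Iterable, Set


def pmi(tag_sets: Iterable[Set[str]], x: str, y: str) -> float:
    """PMI(x, y) = log2(p(x, y) / (p(x) * p(y))), with probabilities estimated
    as the share of photos whose tag set contains the tag(s).
    Returns -inf when x and y never co-occur."""
    sets = list(tag_sets)
    n = len(sets)
    n_x = sum(1 for s in sets if x in s)
    n_y = sum(1 for s in sets if y in s)
    n_xy = sum(1 for s in sets if x in s and y in s)
    if n_x == 0 or n_y == 0:
        raise ValueError("both tags must occur in at least one photo")
    if n_xy == 0:
        return float("-inf")
    return math.log2((n_xy / n) / ((n_x / n) * (n_y / n)))


photos = [{"joy", "sun"}, {"joy", "sun"}, {"sad", "rain"}, {"sad", "sun"}]
print(pmi(photos, "sad", "rain"))  # 1.0 (co-occur twice as often as chance)
print(pmi(photos, "joy", "rain"))  # -inf (never co-occur)
```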

To retrieve images with strong affective orientation, an affective magnitude computation method is developed. The method combines association strength, the proportion of affect indicative tags in a photo's tag set, and community feedback. First, community feedback parameters such as views, favorites and comments are used to select candidate photos whose parameter values are above average. Second, for each candidate photo, an affective weight is calculated as the ratio of the number of affect indicative tags in the photo's tag set to the total number of tags in that set. Third, for each candidate photo, the affective magnitude for a given affect type or affect quadrant is computed as the weighted sum of the PMI values between the affect type(s) and the photo's affect indicative tags.
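The three steps can be sketched as below. Several details are assumptions, not taken from the source: "above average" is read as strictly above the sample mean on all three feedback counts, the "weighted sum" is read as the affective weight multiplied by the summed PMI values, PMI pairs missing from the lookup count as zero, and negative PMI values are kept as is. The `Photo` class, tag names and numbers are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Sequence, Set, Tuple


@dataclass
class Photo:
    photo_id: str
    tags: Set[str]
    views: int
    favorites: int
    comments: int


def candidates(photos: Sequence[Photo]) -> List[Photo]:
    """Step 1 (assumed rule): keep photos above the mean on all feedback counts."""
    mv = mean(p.views for p in photos)
    mf = mean(p.favorites for p in photos)
    mc = mean(p.comments for p in photos)
    return [p for p in photos if p.views > mv and p.favorites > mf and p.comments > mc]


def affective_weight(photo: Photo, indicative: Set[str]) -> float:
    """Step 2: share of the photo's tags that are affect indicative tags."""
    if not photo.tags:
        return 0.0
    return len(photo.tags & indicative) / len(photo.tags)


def affective_magnitude(
    photo: Photo,
    affect_types: Sequence[str],
    indicative: Set[str],
    pmi: Dict[Tuple[str, str], float],
) -> float:
    """Step 3 (assumed form): weight * sum of PMI(affect type, indicative tag).
    Pass one affect type, or the three types of a quadrant."""
    total = sum(
        pmi.get((a, t), 0.0) for a in affect_types for t in photo.tags & indicative
    )
    return affective_weight(photo, indicative) * total


photos = [
    Photo("p1", {"sun", "beach", "me", "trip"}, views=100, favorites=10, comments=5),
    Photo("p2", {"dog", "park"}, views=10, favorites=1, comments=0),
    Photo("p3", {"sun", "smile"}, views=80, favorites=8, comments=4),
]
indicative = {"sun", "beach", "smile"}
pmi = {("happy", "sun"): 1.0, ("happy", "beach"): 0.5, ("happy", "smile"): 2.0}
for p in candidates(photos):
    print(p.photo_id, affective_magnitude(p, ["happy"], indicative, pmi))
# p1 0.75
# p3 3.0
```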

Results

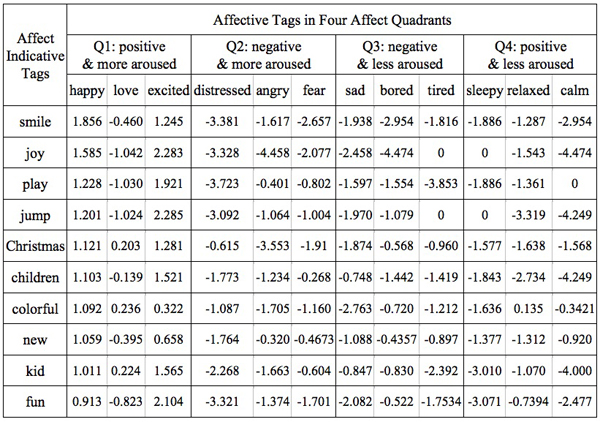

The 12,000 sampled photos carry 258,293 tags in total, of which 70,066 are unique. The frequency distribution of these unique tags has a long tail. A stemming process is applied to reduce inflected words to their root forms, e.g., girls to girl and beautiful to beauti. A frequency threshold of 1% is also set to filter out less meaningful tags. Stemming and filtering leave 386 tag word forms. These 386 tags are called affect indicative tags because of their high co-occurrence with the 12 affective tags. Calculating the PMI value for each pair of an affective tag and an affect indicative tag yields a 12 × 386 matrix of association strength. Table 1 shows part of this matrix of PMI values between affective tags and affect indicative tags.
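The stemming and filtering step might look like the sketch below. The source does not say what the 1% threshold is relative to; this sketch assumes it is the share of photos whose (stemmed) tag set contains the word, counted once per photo. The stemmer is passed in as a function: the examples girls → girl and beautiful → beauti match Porter stemmer output (for instance NLTK's `PorterStemmer().stem`), but the `toy_stem` below is a hypothetical stand-in that only strips a plural "s".

```python
from collections import Counter
from typing import AbstractSet, Callable, Iterable, List, Set


def indicative_candidates(
    tag_sets: Iterable[Set[str]],
    stem: Callable[[str], str],
    min_share: float = 0.01,
    exclude: AbstractSet[str] = frozenset(),
) -> List[str]:
    """Stem all tags, then keep stems found in at least `min_share` of photos
    (assumed reading of the 1% threshold), minus any excluded words."""
    sets = list(tag_sets)
    doc_freq: Counter = Counter()
    for tags in sets:
        # Count each stem once per photo, even if several tags share it.
        doc_freq.update({stem(t.lower()) for t in tags})
    cutoff = min_share * len(sets)
    return sorted(s for s, c in doc_freq.items() if c >= cutoff and s not in exclude)


def toy_stem(word: str) -> str:
    """Hypothetical stand-in for a real stemmer: strips a plural 's' only."""
    return word[:-1] if len(word) > 3 and word.endswith("s") else word


photos = [{"girls", "sky"}, {"girl", "sea"}, {"Girl", "sky"}, {"sky"}]
print(indicative_candidates(photos, toy_stem, min_share=0.5))  # ['girl', 'sky']
print(indicative_candidates(photos, toy_stem, min_share=0.5, exclude={"sky"}))  # ['girl']
```

The `exclude` parameter is a hypothetical option for dropping the affective tags themselves; the source does not state whether they were removed from the 386 forms.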

Table 1

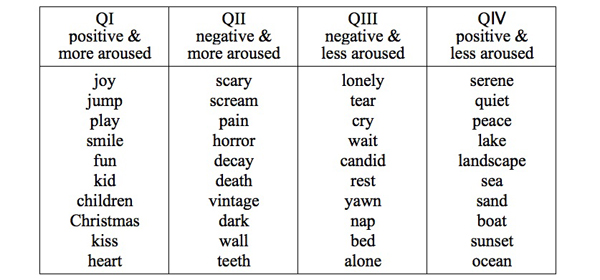

The PMI values of each affect indicative tag are further grouped by affect quadrant to give the tag a distinct affective orientation. The association strength between an affect indicative tag and an affect quadrant is calculated by summing the positive PMI values between the tag and the three affective tags in that quadrant. Negative PMI values indicate unlikely co-occurrence and are set to zero during quadrant grouping, so that a negative association with one affective tag does not cancel out positive associations with the others and underestimate the tag's association with the quadrant as a whole. Table 2 lists, for each of the four affect quadrants, the top ten affect indicative tags with the strongest association.
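A sketch of the quadrant grouping, assuming the PMI matrix is stored as a dictionary keyed by (affective tag, indicative tag). The quadrant-to-word assignment and all values below are hypothetical, since the 12 selected emotion words are not listed here.

```python
from typing import Dict, List, Mapping, Sequence, Tuple


def quadrant_strengths(
    pmi: Mapping[Tuple[str, str], float],
    quadrants: Mapping[str, Sequence[str]],
    indicative: Sequence[str],
) -> Dict[str, Dict[str, float]]:
    """For each quadrant, sum the PMI values between an indicative tag and the
    quadrant's affective tags, treating negative (or missing) values as 0."""
    return {
        q: {t: sum(max(0.0, pmi.get((a, t), 0.0)) for a in affects) for t in indicative}
        for q, affects in quadrants.items()
    }


def top_tags(strengths: Mapping[str, float], k: int = 10) -> List[str]:
    """Indicative tags ranked by association strength with one quadrant."""
    return sorted(strengths, key=lambda t: strengths[t], reverse=True)[:k]


# Hypothetical quadrants and PMI values.
quadrants = {"Q1": ["excited", "happy", "delighted"], "Q3": ["sad", "gloomy", "bored"]}
pmi = {
    ("happy", "sun"): 1.25, ("excited", "sun"): -0.5, ("delighted", "sun"): 0.25,
    ("sad", "rain"): 1.5, ("gloomy", "rain"): 0.5, ("happy", "rain"): -1.0,
}
strengths = quadrant_strengths(pmi, quadrants, ["sun", "rain"])
print(strengths)
# {'Q1': {'sun': 1.5, 'rain': 0.0}, 'Q3': {'sun': 0.0, 'rain': 2.0}}
print(top_tags(strengths["Q1"], k=1))  # ['sun']
```

Without the clipping, "sun" would score 1.0 rather than 1.5 for Q1, because the negative value for "excited" would offset part of the positive association.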

Table 2

These affect indicative tags can be used to retrieve images with predicted affective orientation. Given a set of images whose tag sets include an affect indicative tag, the affective magnitude computation (AffMC) method determines each image's affective magnitude in the corresponding affect quadrant and thus yields a ranked list of images. The performance of AffMC is compared with that of Google and Flickr, both of which also rank retrieved images. Images retrieved by AffMC, Flickr and Google with top affect indicative tags of each affect quadrant are shown in Tables 3, 4, 5 and 6.
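Ranking for a query tag could be sketched as follows. This assumes a quadrant-level magnitude equal to the affective weight times the summed quadrant association strengths of the photo's indicative tags, and it omits the feedback-based candidate filtering for brevity; both choices, and the example data, are illustrative rather than the method's confirmed details.

```python
from typing import List, Mapping, Set, Tuple


def rank_for_tag(
    photos: Mapping[str, Set[str]],
    query_tag: str,
    quadrant_strength: Mapping[str, float],
) -> List[Tuple[str, float]]:
    """Return (photo_id, magnitude) for photos tagged `query_tag`, highest first.

    Assumed magnitude: (share of the photo's tags that are indicative tags)
    times the summed quadrant strengths of those tags.
    """
    ranked = []
    for photo_id, tags in photos.items():
        if query_tag not in tags:
            continue
        hits = [t for t in tags if t in quadrant_strength]
        weight = len(hits) / len(tags)  # tags is non-empty: it contains query_tag
        ranked.append((photo_id, weight * sum(quadrant_strength[t] for t in hits)))
    ranked.sort(key=lambda pair: pair[1], reverse=True)
    return ranked


# Hypothetical Q1 strengths and photos.
strength_q1 = {"joy": 2.0, "sun": 1.0, "smile": 1.0}
photos = {"a": {"joy", "sun", "x", "y"}, "b": {"joy", "smile"}, "c": {"sun", "smile"}}
print(rank_for_tag(photos, "joy", strength_q1))  # [('b', 3.0), ('a', 1.5)]
```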

Table 3

Q1: positive & more aroused; affect indicative tag: joy (images retrieved by AffMC, Flickr and Google)

*Copyrights of all images shown are reserved by original contributors.

Table 4

Q2: negative & more aroused; affect indicative tag: scary (images retrieved by AffMC, Flickr and Google)

Table 5

Q3: negative & less aroused; affect indicative tag: lonely (images retrieved by AffMC, Flickr and Google)

Table 6

Q4: positive & less aroused; affect indicative tag: serene (images retrieved by AffMC, Flickr and Google)

To evaluate the affective magnitude computation (AffMC) method, the top three affect indicative tags of each affect quadrant are used to retrieve images. Each ranking method contributes twelve images, three for each affect quadrant, so a total of thirty-six images are shown to 136 subjects to test their emotional responses. Survey results indicate that AffMC outperforms Flickr and is comparable to Google in retrieving images with strong affective orientation. Note that Google draws on different image sources from Flickr (and AffMC), and that Google benefits from a much larger volume of user feedback for effective ranking.

Conclusions

This research shows that co-occurrence among image tags can be exploited to identify affect indicative tags. Accordingly, images with strong affective orientation can be identified and retrieved, and viewers' emotional responses to them can largely be anticipated. The method could be developed further into an engine that sorts and groups images into affective types based on their tags. Potential applications include intelligent visual services that enhance or compensate for users' emotions. A further research implication is that significant pairs can be revealed by substantial repetition among loosely associated items, and that the triangular cluster formed by a significant pair and the central item provides a basis for functional exploitation.

Acknowledgement

This work was partially supported by the National Science Council, Taiwan, [NSC 100-2221-E-004-013]; and the National Chengchi University’s Top University Project.

References

Church, K. W., and P. Hanks (1989). Word Association Norms, Mutual Information, and Lexicography. Proceedings of the 27th Annual Meeting of the Association for Computational Linguistics, June 1989, Vancouver, British Columbia, Canada, pp. 76-83.

Kessler, S. (2011). Facebook photos by the numbers, Mashable. http://mashable.com/2011/02/14/facebook-photo-infographic/ (accessed 28 October 2011).

Mitchell, W. J. T. (1994). Picture Theory: Essays on Verbal and Visual Representation. Chicago: University of Chicago Press.

Molyneaux, B. L., ed. (1997). The Cultural Life of Images: Visual Representation in Archaeology. London: Routledge.

Posner, J., J. A. Russell, and B. S. Peterson (2005). The Circumplex Model of Affect: An Integrative Approach to Affective Neuroscience, Cognitive Development, and Psychopathology. Development and Psychopathology 17(3): 715-734.

Recchia, G. L., and M. N. Jones (2009). More Data Trumps Smarter Algorithms: Comparing Pointwise Mutual Information to Latent Semantic Analysis. Behavior Research Methods 41: 657-663.

Trant, J. (2009). Studying Social Tagging and Folksonomy: A Review and Framework. Journal of Digital Information 10(1): 1-42.